A gentle intro to large language models: architecture and examples

What are large language models?

Large language models (LLMs) are machine learning models trained on vast amounts of text data. Their primary function is to predict the probability of a word given the preceding words in a sentence. This ability makes them powerful tools for a variety of tasks, including creative writing, answering questions about virtually any body of knowledge, and generating code in various programming languages.

These models are “large” because they have many parameters – often in the billions. This size allows them to capture a broad range of information about language, including syntax, grammar, and some aspects of world knowledge.

The best-known example of a large language model is GPT-3, developed by OpenAI, which has 175 billion parameters and was trained on hundreds of gigabytes of text. In March 2023, OpenAI released its next-generation LLM, GPT-4, considered the state of the art at the time of writing. It is available to the public via ChatGPT, a popular online service.

Other LLMs widely used today are PaLM 2, developed by Google, which powers Google Bard, and Claude, developed by Anthropic. Both Google and Meta are developing their own next-generation LLMs, called Gemini and LLaMA, respectively.

Large language models are part of the broader field of natural language processing (NLP), which seeks to enable computers to understand, generate, and respond to human language in a meaningful and efficient way. As these models continue to improve and evolve, they are pushing the envelope of what artificial intelligence can do and how it impacts our lives and human society in general.

This is part of a series of articles about generative AI.

Large language model architecture

Let’s review the basic components of LLM architecture:

The embedding layer

The embedding layer is the first stage in a large language model. Its job is to convert each word in the input into a high-dimensional vector. These vectors capture the semantic and syntactic properties of the words, allowing words with similar meanings to have similar vectors. This process enables the model to understand the relationships between different words and use this understanding to generate coherent and contextually appropriate responses.
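To make the idea concrete, here is a minimal sketch of an embedding lookup in plain Python. The three words and their 4-dimensional vectors are hypothetical values picked by hand for illustration; a trained model learns its vectors and uses far more dimensions. Cosine similarity is used here as one common way to compare vectors.

```python
import math

# Hypothetical 4-dimensional embeddings, hand-picked for illustration.
# A trained model learns these values and uses far more dimensions.
EMBEDDINGS = {
    "cat": [0.9, 0.8, 0.1, 0.0],
    "dog": [0.8, 0.9, 0.2, 0.1],
    "car": [0.1, 0.0, 0.9, 0.8],
}


def embed(tokens):
    """Look up the vector for each token (the embedding layer's job)."""
    return [EMBEDDINGS[token] for token in tokens]


def cosine_similarity(a, b):
    """Return the cosine of the angle between vectors a and b."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


cat, dog, car = embed(["cat", "dog", "car"])
# Words with similar meanings get similar vectors.
print(cosine_similarity(cat, dog) > cosine_similarity(cat, car))  # True
```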

Positional encoding

Positional encoding is the process of adding information about the position of each word in the input sequence to the word embeddings. This is necessary because the attention mechanism at the core of the model treats its input as an unordered set of words: without extra information, it cannot distinguish “dog bites man” from “man bites dog”. By adding positional encoding, we give the model a sense of the order in which words appear, enabling it to understand the structure of the input text.

Positional encoding can be done in several ways. One common method is to add sinusoidal functions of different frequencies to the word embeddings. This results in a unique positional encoding for each position, and also allows the model to generalize to sequences of different lengths.
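The sinusoidal method can be sketched in a few lines of plain Python. This follows the commonly used formulation in which even vector indices use a sine and odd indices use a cosine; the base of 10000 and the function names `positional_encoding` and `add_positions` are assumptions of this sketch, not something the article specifies.

```python
import math


def positional_encoding(position, d_model):
    """Return the sinusoidal encoding vector for one position.

    Even indices use sine and odd indices use cosine; each pair of
    indices shares a frequency that decreases as the index grows.
    """
    encoding = []
    for i in range(d_model):
        frequency = 1.0 / (10000 ** ((i - i % 2) / d_model))
        angle = position * frequency
        encoding.append(math.sin(angle) if i % 2 == 0 else math.cos(angle))
    return encoding


def add_positions(embeddings):
    """Add the positional encoding to each embedding, element by element."""
    d_model = len(embeddings[0])
    return [
        [e + p for e, p in zip(vector, positional_encoding(pos, d_model))]
        for pos, vector in enumerate(embeddings)
    ]


# The same (hypothetical) embedding at two positions ends up different.
same_word = [[0.5, 0.5, 0.5, 0.5], [0.5, 0.5, 0.5, 0.5]]
first, second = add_positions(same_word)
print(first != second)  # True
```

Because the encoding is computed from the position rather than stored in a table, it can be evaluated for any position, which is why this method extends to sequences of different lengths.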

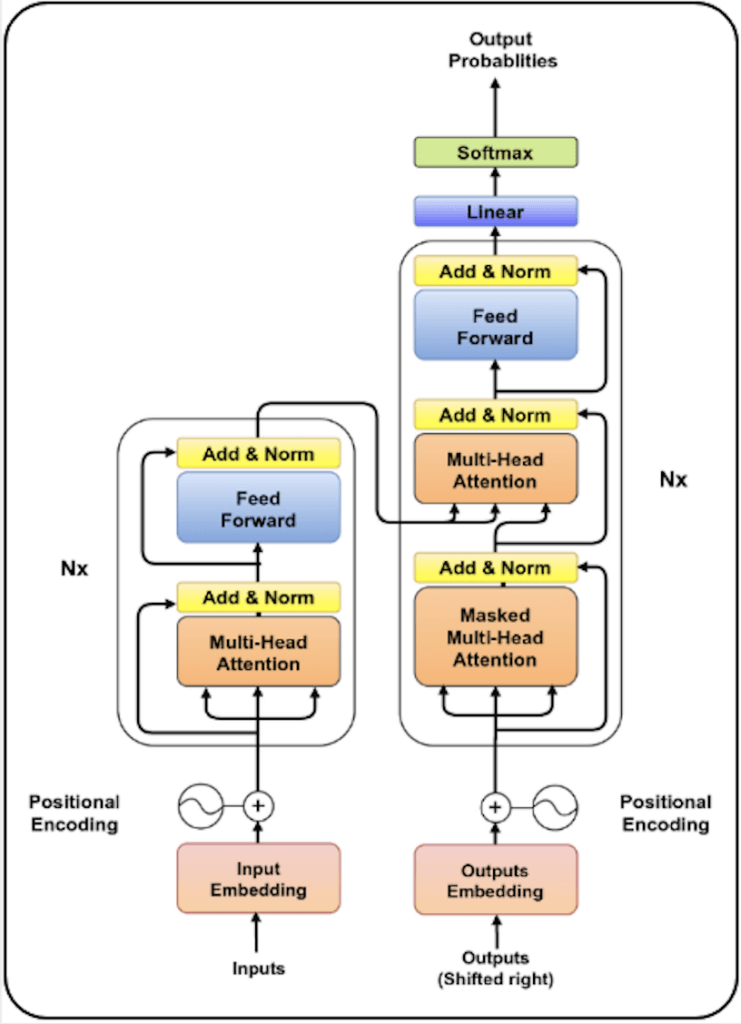

Transformers

Transformers are the core of the LLM architecture. They are responsible for processing the word embeddings, taking into account the positional encodings and the context of each word. Transformers consist of several layers, each containing a self-attention mechanism and a feed-forward neural network.

Source: ResearchGate

The self-attention mechanism allows the model to weigh the importance of each word in the input sequence when predicting the next word. This is done by calculating a score for each word based on its similarity to the other words in the sequence. The scores are then used to weight the contribution of each word to the prediction.
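The scoring and weighting steps can be sketched as follows. This is a deliberately simplified version: real transformers first map each vector to separate query, key, and value vectors using learned weight matrices, while this sketch uses the input vectors for all three roles. The dot product as the similarity measure and the scaling by the square root of the vector size follow the standard scaled dot-product formulation; the input values are hypothetical.

```python
import math


def softmax(scores):
    """Turn raw scores into weights that are positive and sum to 1."""
    peak = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def self_attention(vectors):
    """Simplified self-attention without learned projections.

    The input vectors act as queries, keys, and values at once, which
    keeps the sketch short but is not what a trained model does.
    """
    scale = math.sqrt(len(vectors[0]))
    outputs = []
    for query in vectors:
        # Similarity score between this word and every word in the sequence.
        scores = [
            sum(q * k for q, k in zip(query, key)) / scale for key in vectors
        ]
        weights = softmax(scores)
        # Weighted sum of all vectors: each word's contribution to the output.
        outputs.append([
            sum(w * value[d] for w, value in zip(weights, vectors))
            for d in range(len(query))
        ])
    return outputs


sequence = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # hypothetical embeddings
for row in self_attention(sequence):
    print([round(x, 3) for x in row])
```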

The feed-forward neural network is responsible for transforming the weighted word embeddings into a new representation that can be used to generate the output text. This transformation is done through a series of linear and non-linear operations, resulting in a representation that captures the complex relationships between words in the input sequence.
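As an illustration of "linear and non-linear operations", the sketch below applies a linear layer, a ReLU non-linearity, and a second linear layer to a single vector. The choice of ReLU, the layer sizes, and all weight values are assumptions made for this example; a trained model learns its weights and typically uses a much wider hidden layer.

```python
def linear(vector, weights, bias):
    """Linear operation: multiply by a weight matrix and add a bias."""
    return [
        sum(x * w for x, w in zip(vector, row)) + b
        for row, b in zip(weights, bias)
    ]


def relu(vector):
    """Non-linear operation: replace negative values with zero."""
    return [max(0.0, x) for x in vector]


def feed_forward(vector, w1, b1, w2, b2):
    """Expand to a wider hidden layer, apply ReLU, then project back."""
    return linear(relu(linear(vector, w1, b1)), w2, b2)


# Hypothetical weights; a trained model learns these values.
W1 = [[1.0, -1.0], [0.5, 0.5], [-1.0, 1.0]]  # 2 inputs -> 3 hidden units
B1 = [0.0, 0.0, 0.0]
W2 = [[1.0, 0.0, 1.0], [0.0, 2.0, 0.0]]  # 3 hidden units -> 2 outputs
B2 = [0.1, -0.1]

print(feed_forward([2.0, 1.0], W1, B1, W2, B2))
```

In a transformer layer, the same feed-forward network is applied to every position in the sequence independently.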

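Text generation

The final step in the LLM architecture is text generation. This is where the model takes the processed word embeddings and generates the output text. This is commonly done by applying a softmax function to the output of the transformers, resulting in a probability distribution over the possible output words. In the simplest case, known as greedy decoding, the model selects the word with the highest probability as the output; many systems instead sample from the distribution to produce more varied text. The chosen word is then appended to the input and the process repeats, one word at a time.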
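The softmax step and the highest-probability selection can be sketched as below. The four-word vocabulary and the raw scores (often called logits) are hypothetical; a real model scores tens of thousands of possible outputs at each step.

```python
import math


def softmax(logits):
    """Convert raw scores into a probability distribution."""
    peak = max(logits)  # subtract the max for numerical stability
    exps = [math.exp(x - peak) for x in logits]
    total = sum(exps)
    return [e / total for e in exps]


VOCABULARY = ["cat", "dog", "mat", "sat"]  # hypothetical 4-word vocabulary
logits = [1.0, 0.5, 3.0, 0.2]  # hypothetical raw scores from the last layer

probabilities = softmax(logits)
# Pick the index of the most probable word.
best = max(range(len(VOCABULARY)), key=lambda i: probabilities[i])
print(VOCABULARY[best])  # mat
```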

Text generation is a challenging process, as it requires the model to accurately capture the complex relationships between words in the input sequence. However, thanks to the transformer architecture and the careful preparation of the word embeddings and positional encodings, LLMs can generate remarkably accurate and lifelike text.

Use cases for large language models

Large language models have a wide range of use cases. Their ability to understand and generate human-like text makes them incredibly versatile tools.

Content generation and copywriting

These models can generate human-like text on a variety of topics, making them excellent tools for creating articles, blog posts, and other forms of written content. They can also be used to generate advertising copy or to create persuasive marketing messages.

Programming and code development

By training these models on large datasets of source code, they can learn to generate code snippets, suggest fixes for bugs, or even help to design new algorithms. This can greatly speed up the development process and help teams improve code quality and consistency.

Chatbots and virtual assistants

These models can be used to power the conversational abilities of chatbots, allowing them to understand and respond to user queries in a natural, human-like way. This can greatly enhance the user experience and make these systems more useful and engaging.

Language translation and linguistic tasks

Finally, large language models can be used for a variety of language translation and linguistic tasks. They can be used to translate text from one language to another, to summarize long documents, or to answer questions about a specific text. LLMs are used to power everything from machine translation services to automated customer support systems.

Types of large language models

Here are the main types of large language models:

Autoregressive

Autoregressive models are a powerful subset of LLMs. They predict future data points based on previous ones in a sequence. This sequential approach allows autoregressive models to generate language that is grammatically correct and contextually relevant. These models are often used in tasks that involve generating text, such as language translation or text summarization, and have proven to be highly effective.
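The sequential approach can be sketched as a loop that predicts one word, appends it, and feeds the longer sequence back in. The lookup table below is a hypothetical stand-in for a trained model, and it only looks at the last word, whereas a real LLM conditions on the whole sequence. The names `predict_next`, `generate`, and the `<end>` marker are assumptions of this sketch.

```python
# Hypothetical next-word table standing in for a trained model.
NEXT_WORD_PROBS = {
    "the": {"cat": 0.6, "mat": 0.4},
    "cat": {"sat": 0.9, "the": 0.1},
    "sat": {"on": 1.0},
    "on": {"the": 1.0},
    "mat": {"<end>": 1.0},
}


def predict_next(tokens):
    """Return the most probable next word given the sequence so far.

    This toy only looks at the last word; a real LLM conditions on the
    whole sequence.
    """
    candidates = NEXT_WORD_PROBS[tokens[-1]]
    return max(candidates, key=candidates.get)


def generate(prompt, max_new_tokens):
    """Append one predicted word at a time, feeding each back as input."""
    tokens = list(prompt)
    for _ in range(max_new_tokens):
        next_token = predict_next(tokens)
        if next_token == "<end>":
            break
        tokens.append(next_token)
    return tokens


print(" ".join(generate(["the"], max_new_tokens=4)))  # the cat sat on the
```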

Autoencoding

Autoencoding models are designed to reconstruct their input data, making them ideal for tasks like anomaly detection or data compression. In the context of language models, they can learn an efficient representation of a language’s grammar and vocabulary, which can then be used to generate or interpret text.

Encoder-decoder

Encoder-decoder models consist of two parts: an encoder that converts the input sequence into an intermediate representation, and a decoder that generates the output sequence from that representation. This architecture is especially useful in tasks like machine translation, where the input and output sequences may be of different lengths.

Bidirectional

Bidirectional models consider both past and future data when making predictions. This two-way approach allows them to understand the context of a word or phrase within a sentence better than their unidirectional counterparts. Bidirectional models have been instrumental in advancing NLP research and have played a crucial role in the development of many LLMs.
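In transformer-based models, one common way to express this difference is an attention mask that states which positions each word may look at. The sketch below builds both kinds of mask for a three-word sequence; the function names are hypothetical and the printout is only a visual aid.

```python
def attention_mask(length, bidirectional):
    """Return a matrix where mask[i][j] is True if position i may use position j."""
    return [
        [bidirectional or j <= i for j in range(length)]
        for i in range(length)
    ]


def show(mask):
    """Print the mask with 'x' for allowed and '.' for blocked positions."""
    for row in mask:
        print(" ".join("x" if allowed else "." for allowed in row))


# Unidirectional: each word sees only itself and earlier words.
show(attention_mask(3, bidirectional=False))
# x . .
# x x .
# x x x

# Bidirectional: each word sees the whole sentence.
show(attention_mask(3, bidirectional=True))
# x x x
# x x x
# x x x
```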

Multimodal

Multimodal models can process and interpret multiple types of data – like text, images, and audio – simultaneously. This ability to understand and generate different forms of data makes them incredibly versatile and opens up a wide range of potential applications, from generating image captions to creating interactive AI systems.

Examples of large language models

Let’s look at specific examples of large language models used in the field.

1. OpenAI GPT series

The Generative Pre-trained Transformer (GPT) models, developed by OpenAI, are a series of language prediction models that have led research on LLMs in recent years. GPT-3, released in 2020, has 175 billion parameters and can generate impressively coherent and contextually relevant text.

In November 2022, OpenAI released ChatGPT, whose first version was based on GPT-3.5, a model trained with reinforcement learning from human feedback (RLHF) to generate longer and more meaningful responses. ChatGPT went viral and captured the public’s imagination about the potential of LLM technology.

In March 2023, GPT-4 was released. At the time of its release, it was widely considered the most powerful LLM available, with significant improvements to quality and steerability (the ability to generate specific responses with more nuanced instructions). GPT-4 has a larger context window, processing conversations of up to 32,000 tokens, and has multimodal capabilities, so it can receive both text and images as inputs.

2. Google PaLM

Google’s PaLM (Pathways Language Model) is another notable LLM, and is the basis for the Google Bard service, an alternative to ChatGPT.

The original PaLM model was trained on a diverse range of internet text. However, unlike most other large language models, the PaLM model was also trained on structured data, including tables, lists, and other forms of structured data available on the internet. This gives it an edge in understanding and generating text that involves structured data.

The original PaLM has 540 billion parameters. It achieves improved training efficiency, which is critical for such a large model, by modifying the Transformer architecture so that the attention and feed-forward layers can be computed in parallel. Its successor, PaLM 2, has significantly improved language understanding, language generation, and reasoning capabilities.

3. Anthropic Claude

Anthropic’s Claude is another exciting example of a large language model. Developed by Anthropic, a research company co-founded by OpenAI alumni, Claude is designed to generate human-like text that is not only coherent but also emotionally and contextually aware.

Claude’s major innovation is that it offers a huge context window – it can process conversations of up to 100,000 tokens (around 75,000 words). At the time of writing, this is the largest context window of any LLM, and it opens up new applications, such as providing entire books or very large documents and performing language tasks based on their entire contents.
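The figures above imply roughly 0.75 words per token, which gives a quick way to estimate whether a document fits in a context window. The sketch below applies that ratio; treat it as a rough estimate only, since the real ratio depends on the tokenizer and the language of the text. The function name `fits_in_context` is hypothetical.

```python
# Ratio implied by the figures above: 75,000 words per 100,000 tokens.
WORDS_PER_TOKEN = 75_000 / 100_000


def fits_in_context(word_count, context_tokens=100_000):
    """Estimate whether a text of word_count words fits in the context window."""
    estimated_tokens = word_count / WORDS_PER_TOKEN
    return estimated_tokens <= context_tokens


print(fits_in_context(60_000))  # True: about 80,000 tokens
print(fits_in_context(90_000))  # False: about 120,000 tokens
```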

4. Meta LLaMA 2

Meta’s LLaMA 2 is a free-to-use large language model. Available in sizes ranging from 7B to 70B parameters, it provides a flexible architecture suitable for various applications. LLaMA 2 was trained on 2 trillion tokens, which enables it to perform well on reasoning, coding, and knowledge tests.

Notably, LLaMA 2 has a context length of 4096 tokens, double that of its predecessor, LLaMA 1. This increased context length allows for more accurate understanding and generation of text in longer conversations or documents. For fine-tuning, the model incorporates over 1 million human annotations, enhancing its performance in specialized tasks.

Two notable variants of LLaMA 2 are Llama Chat and Code Llama. Llama Chat has been fine-tuned specifically for conversational applications, utilizing publicly available instruction datasets and a wealth of human annotations. Code Llama is built for code generation tasks and supports a wide array of programming languages such as Python, C++, Java, PHP, TypeScript, C#, and Bash.

Tabnine: enterprise-grade programming assistant based on Large Language Models

Tabnine is an AI coding assistant based on GPT architecture and used by over 1 million developers from thousands of companies worldwide. It provides contextual code suggestions that boost productivity, streamlining repetitive coding tasks and producing high-quality, industry-standard code. Unlike generic tools such as ChatGPT, Tabnine does not require you to expose your company’s confidential data or code for code generation or analysis, does not give another company access to your code to train its model, and does not risk exposing your private requests or questions to the general public.

Unique enterprise features

Tabnine’s code suggestions are based on Large Language Models that are exclusively trained on credible open-source repositories with permissive licensing. This eliminates the risk of introducing security vulnerabilities or intellectual property violations in your generated code. With Tabnine Enterprise, developers have the flexibility to run our AI tools on-premises or in a Virtual Private Cloud (VPC), so you retain full control over your data and infrastructure (complying with enterprise data security policies) while leveraging the power of Tabnine to accelerate and simplify software development and maintenance.

Tabnine’s advantages for enterprise software development teams:

- Tabnine is trained exclusively on permissive open-source repositories

- Tabnine’s architecture and deployment approach eliminates privacy, security, and compliance risks

- Tabnine’s models avoid copyleft exposure and respect developers’ intent

- Tabnine can be locally adapted to your codebase and knowledge base without exposing your code to a third party

In summary, Tabnine is an AI code assistant that supports development teams leveraging their unique context and preferences while respecting privacy and ensuring security. Try Tabnine for free today or contact us to learn how we can help accelerate your software development.