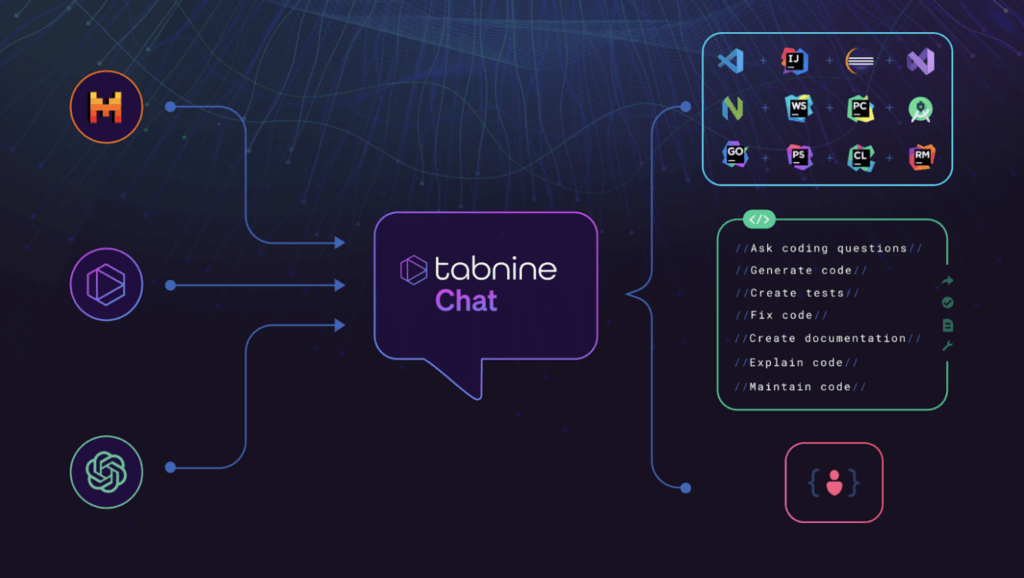

Switchable models come to Tabnine Chat

We’re thrilled to unveil a powerful new capability that puts you in the driver’s seat when using Tabnine. Starting today, you can switch the underlying large language model (LLM) that powers Tabnine’s AI chat at any time. In addition to the purpose-built Tabnine Protected model that we custom-developed for software development teams, you now have access to additional models from OpenAI and a new model that brings together the best of Tabnine and Mistral, the leading open-source AI model provider.

You can choose from the following models with Tabnine’s AI software development chat tools:

- Tabnine Protected: Tabnine’s original model, designed to deliver high performance without the risks of intellectual property violations or exposing your code and data to others.

- Tabnine + Mistral: Tabnine’s newest offering, built to deliver the highest class of performance while still maintaining complete privacy.

- GPT-3.5 Turbo and GPT-4 Turbo: The industry’s most popular LLMs, proven to deliver the highest levels of performance for teams willing to share their data externally.

More importantly, you’re not locked into any one of these models. Switch instantly between models to suit specific projects or use cases, or to meet the requirements of specific teams. No matter which LLM you choose, you’ll always benefit from the full capability of Tabnine’s highly tuned AI agents.

Today’s announcement comes on the heels of our recent major update that delivers highly personalized AI recommendations. Together, these enhancements enable you to maximize the value that you get from Tabnine.

Why do users need flexibility to choose models?

Generative AI has gone mainstream in just a couple of years, and developments continue to unfold rapidly. More powerful LLMs are regularly being introduced in the market. As we’ve seen in past innovation cycles, increased competition brings new releases that challenge the supremacy of older releases — sometimes from a different company or sometimes within the same provider. In addition, new vendors continue to enter the market, each with characteristics that are desirable or undesirable for their intended users.

Historically, when an engineering team selects an AI software development tool, they pick both an application (the AI coding assistant) and the underlying model (e.g., Tabnine’s custom model, GitLab’s use of Google’s Vertex Codey, Copilot’s use of OpenAI). But what if an engineering team’s expectations or requirements differ from the parameters used to create that model? What if they want to take advantage of an innovation produced by an LLM other than the one that powers their current AI coding assistant? What if one model is better for one project or use case, but another is better for a different project? What if customers want to use the AI coding assistant with LLMs that they trained themselves?

Ideally, engineering teams should be able to use the best model for their use case without having to run multiple AI assistants or switch vendors. They should be able to choose the LLMs under their favorite tools based on a model’s performance, its privacy policies, and the code the model was trained on. They should be able to benefit from advancements in new LLMs without having to install, integrate, deploy, and train their users on a different tool. To date, none of this has been possible with the best-of-breed AI coding assistants. Tabnine is changing that with today’s announcement.

Future-proof your AI investment and avoid LLM lock-in

Tabnine has supported unique models under our AI coding assistant for some time — Tabnine Enterprise customers can run their own fine-tuned model that’s trained on their unique code. With this inherent flexibility, we can bring state-of-the-art models from various sources into Tabnine with relative ease. As such, on top of the four models available today, we will soon introduce support for even more models to Tabnine Chat.

Switchable models give engineering teams the best of two worlds: the rich understanding of programming languages and software development methods native within the LLMs, along with the years of effort Tabnine has invested in creating a developer experience and AI agents that best exploit that understanding and fit neatly into development workflows.

LLMs are a critical component of any AI software development tool, but they’re ineffective assistants if used solely on their own. Tabnine has created highly advanced, proprietary methods to maximize the performance, relevance, and quality of output from the LLM. These include complex prompt engineering, intimate awareness of the local code and global codebase to benefit from the context of a user’s or team’s existing work, and fine-tuning of the experience for specific tasks within the software development life cycle. By applying that expertise uniquely to each LLM, Tabnine gives its users an AI coding assistant that makes optimal use of each model. And with Tabnine’s ongoing support for all of the most popular IDEs and integrations with popular software development tools, engineering teams get an AI assistant that works within their existing ecosystem.

Tabnine eliminates any worry about missing out on LLM innovations and makes it simple to use new models as they become available. Tabnine future-proofs your investment in accelerating and simplifying software development using AI.

Selecting models for each situation

Selecting the right model for your team or project is not as simple as picking the most popular one. Adopting AI tools comes with critical decisions around data and code privacy, data storage and residency, and intellectual property considerations. Privacy concerns and the business risks of license and copyright violations are the top reasons many companies have resisted rolling out generative AI tools.

When picking the underlying LLMs to be used in your AI software development tools, there are three main considerations:

- Performance: Does the model provide accurate, relevant results for the programming languages and frameworks I’m working in right now?

- Privacy: Does the model store my code or user data? Could my code or data be shared with third parties? Is my code used to train their model?

- Protection: What code was the model trained on? Is it all legally licensed from the author? Will I create risks for my business by accepting generated code from a model trained on unlicensed repositories?

During model selection, Tabnine provides transparency into the behaviors and characteristics of each of the available models to help you decide which one is right for your situation.
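
As a rough illustration of how the privacy and protection questions above can narrow the choice, here is a minimal Python sketch. It is not part of Tabnine or any Tabnine API: the `ChatModel` class, its fields, and `eligible_models` are hypothetical names invented for this example. The attribute values only restate the model descriptions earlier in this post, and `None` marks a property this post does not state. Performance is left out because it depends on your languages and frameworks and has to be judged on your own code.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass(frozen=True)
class ChatModel:
    """Hypothetical record of the characteristics described in this post."""
    name: str
    keeps_code_private: bool      # Privacy: code and data are not shared externally
    ip_protected: Optional[bool]  # Protection: None means "not stated in this post"


# Values restate the descriptions in this post; they are not an official spec.
MODELS = [
    ChatModel("Tabnine Protected", keeps_code_private=True, ip_protected=True),
    ChatModel("Tabnine + Mistral", keeps_code_private=True, ip_protected=None),
    ChatModel("GPT-3.5 Turbo", keeps_code_private=False, ip_protected=None),
    ChatModel("GPT-4 Turbo", keeps_code_private=False, ip_protected=None),
]


def eligible_models(models: List[ChatModel],
                    require_privacy: bool,
                    require_protection: bool) -> List[str]:
    """Return the names of models that satisfy the project's hard requirements."""
    names = []
    for model in models:
        if require_privacy and not model.keeps_code_private:
            continue
        # Treat an unstated protection property (None) as not satisfying it.
        if require_protection and model.ip_protected is not True:
            continue
        names.append(model.name)
    return names


# A project with strict privacy and IP requirements.
print(eligible_models(MODELS, require_privacy=True, require_protection=True))
```

Treating unstated properties as unmet is a deliberately conservative choice for this sketch: a model passes a strict requirement only when the property has been confirmed.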

Switch models at will

Tabnine offers users the utmost flexibility and control over choosing their models for Tabnine Chat:

- Tabnine Pro users specify their preferred model the first time they use Chat, and can change it at any time. For projects where data privacy and legal risks are less important, you can use a model optimized for performance over compliance. As you switch to working on projects that have stricter requirements for privacy and protection, you can change to a model like Tabnine Protected that is built for that purpose. The underlying LLM can be changed with just a few clicks — and Tabnine Chat adapts instantly.

- Tabnine Enterprise administrators control which models are available at their organization and set the default models for their teams. Enterprises often make strategic bets on using specific models across their organization. This update makes Tabnine compatible with your chosen LLM and its ecosystem, so you can get the most out of Tabnine without having to change your LLM strategy.
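
The relationship between an administrator's settings and a user's preference can be sketched as a small resolution rule. This is a hypothetical illustration only, not Tabnine's implementation or configuration format: the function name `resolve_chat_model` and its parameters are invented here, and it assumes that a user's preference applies only when the administrator has enabled that model.

```python
from typing import Iterable, Optional


def resolve_chat_model(enabled: Iterable[str],
                       default: str,
                       preferred: Optional[str] = None) -> str:
    """Pick the model to use for a chat session (hypothetical rule).

    enabled:   models the administrator has made available
    default:   the administrator's default model for the team
    preferred: the model the user selected, if any
    """
    enabled_set = set(enabled)
    if default not in enabled_set:
        raise ValueError(f"default model {default!r} is not enabled")
    # Honor the user's choice only if the organization allows it.
    if preferred is not None and preferred in enabled_set:
        return preferred
    return default


org_models = ["Tabnine Protected", "Tabnine + Mistral"]
print(resolve_chat_model(org_models, "Tabnine Protected", "Tabnine + Mistral"))
```

Under this rule, a user who asks for a model outside the enabled set falls back to the team default rather than receiving an error.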

Regardless of the model selected, engineering teams get the full capability of Tabnine, including code generation, code explanations, documentation generation, and AI-created tests — and Tabnine is always highly personalized to each engineering team through both local and full codebase awareness.

How to take advantage of switchable models for Tabnine Chat

There’s no additional cost to use any of the models for Tabnine Chat. Starting today, every Tabnine Pro user has access to all the models and can pick the model they prefer. Tabnine Enterprise users can contact our Support team and specify the models that should be enabled for their organization.

Check out our Docs to learn more about this announcement. If you’re not yet a customer, you can sign up for Tabnine Pro today — it’s free for 90 days.